It all started because I wanted to follow this tutorial - creating your own character-level GPT - and train it on a dataset more interesting than Shakespeare. A stroke of genius: I could use my ~2 million words' worth of diary entries.

2 million words: that seems like a lot, right? Well, I have Memory Hoarding OCD (MHOCD), aka the neurological compulsion to write everything down. Memory hoarders believe it is of utmost importance to encode every detail of their human experience.

Pay attention to that internal monologue going on in your head right now. If there were some way to rigorously transcribe it, you would have a dataset near 1-to-1 with your mind. Without getting too philosophical, you probably get the gist of what I’m saying. So I continued forth, hoping for some kind of pseudotherapy.

In the deepest hour of the night, confess to yourself that you would die if you were forbidden to write. And look deep into your heart where it spreads its roots, the answer, and ask yourself, must I write? - Rainer Maria Rilke

training

Using pytorch, numpy and 12 hours of training on a laptop, the model is able to ‘dream’ sentences by predicting the most likely next character.

1 | She holds me every on mt dinner word to something I f*ck right a milk I must want to no feel about this stuff like ur whole quickly detail adorable in bedroom with why Just deserve that did then. you Feel someone like those fring from whole excited to be the tell her personality about her friends it gets so deeplying into the reminder. Be hot. Actually get drying to Oat and I could drive the places with her. Aight the whole Integrity. Get To Her. |

I know - this is unintelligible gibberish. It’s still very impressive: given ~16 KB of code, the model is even able to use emojis the exact same way I do…
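For a sense of what "predicting the next character" means mechanically, here is a minimal sketch. It is not the transformer from the tutorial: it swaps in a much simpler bigram model that only counts which character tends to follow which, and it samples in proportion to those counts rather than always taking the single most likely character. The `corpus` string is a made-up placeholder, and all function names are my own for illustration.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts

def sample_next(counts, prev, rng):
    """Sample the next character in proportion to its observed frequency."""
    followers = counts.get(prev)
    if not followers:
        # Dead end (character never seen with a follower): pick any known character.
        return rng.choice(sorted(counts))
    chars = sorted(followers)
    weights = [followers[c] for c in chars]
    return rng.choices(chars, weights=weights, k=1)[0]

def generate(counts, start, length, seed=0):
    """'Dream' a string one character at a time, feeding each output back in."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        out.append(sample_next(counts, out[-1], rng))
    return "".join(out)

# Placeholder text standing in for the diary (assumption, not real data).
corpus = "i must write. i must want to write it all down. "
model = train_bigram(corpus)
sample = generate(model, "i", 40)
print(sample)
```

A GPT does the same loop (predict a distribution, sample, append, repeat), but conditions on a long window of previous characters instead of just the last one, which is where the ~$10^7$ parameters go.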

The problem is that this model was born and raised entirely within the universe of my diary - unlike larger models, it hadn’t been exposed to sufficient data to actually build up any language, vocabulary, or knowledge of the outside world…

The human brain has about 100 trillion ($10^{14}$) synaptic connections, and you will live for about $10^9$ seconds; that’s $\sim 10^5$ bits per second that you need to learn in your life. From this crude argument, there’s no way that most of what we know is through supervised learning, but rather by sucking in these audio/visual/sensory experiences throughout our lives. - Andrew Ng

In the same way toddlers learn to speak in 12-18 months, I had just ‘taught’ this model to speak and think, from scratch.

the magic: finetuning

I thought it’d be interesting to contrast these results with fine-tuning an existing model like gpt2, whose released weights were trained on WebText: many millions of webpages.

My original method exposed my $10^7$ parameter model to around $10^6$ (2 million) words of knowledge. But fine-tuning feels more like teaching a 9-year-old a new concept: they can leverage their pre-existing knowledge of language and understanding of the world, rather than me ‘training up’ that toddler from scratch.

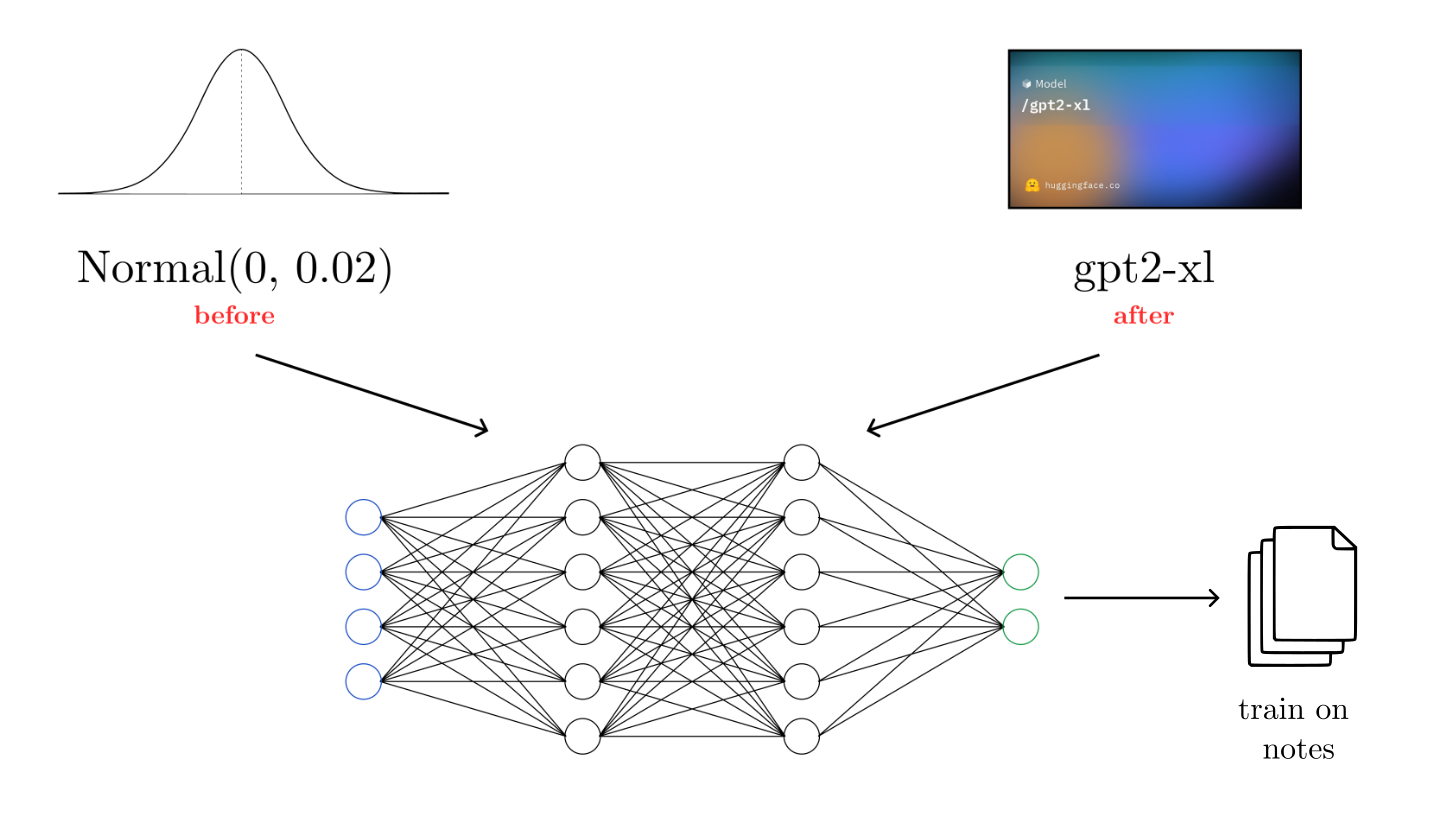

Fine-tuning is exactly the same as training, except instead of initializing the parameters with noise drawn from a $\text{Normal}(0, 0.02)$ distribution, we start from the weights and biases of a pretrained GPT-2 checkpoint (gpt2-medium for the run in the table below), and then train from there as usual.
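The point that only the starting weights differ can be sketched with a toy example. This is not the GPT-2 training code: the "model" here is a two-parameter line fit with plain gradient descent, and the `pretrained` values are hypothetical numbers standing in for a checkpoint that already roughly knows the task. The training loop is shared; only the initialization changes.

```python
import random

def init_from_scratch(n_params, std=0.02, seed=0):
    """Training from scratch: parameters start as Normal(0, std) noise."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, std) for _ in range(n_params)]

def init_from_pretrained(pretrained):
    """Fine-tuning: parameters start as a copy of existing weights."""
    return list(pretrained)

def mse(params, data):
    """Mean squared error of the toy model y = w * x + b."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(params, data, lr=0.05, steps=3):
    """The same gradient-descent loop, whichever way params were initialized."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]

data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]  # toy task: y = 2x + 1
pretrained = [1.9, 0.9]  # hypothetical "checkpoint" that is already close

scratch_start = init_from_scratch(2)
finetune_start = init_from_pretrained(pretrained)
scratch_end = train(scratch_start, data)
finetune_end = train(finetune_start, data)

print("scratch  :", mse(scratch_start, data), "->", mse(scratch_end, data))
print("fine-tune:", mse(finetune_start, data), "->", mse(finetune_end, data))
```

With the same small training budget, the run that starts from the "pretrained" values ends at a lower loss, simply because it had less distance to cover.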

| Device | Training Time | Model | Loss |
|---|---|---|---|
| MacBook M2, Metal Performance Shaders | 8 hr | gpt2-medium | 4.7 |

As I read the newly generated output, immediately I could tell that it was too familiar, and knew too much about me and my life. So there was clearly a simple bug in the code; my original notes were being printed to the screen.

But as I went to terminate the program, I realized that I was actually seeing a fresh stream of words. My throat went dry.

It was like that jarring feeling of hearing your own voice played back on a recording. It was awkward to continue reading, having to face the realization that that’s what I sound like.

(Output censored, to protect places/identities/emotions/secrets)

So, it turns out that fine-tuning is very effective. While the original output was held back by its incoherence, this was near-indistinguishable from my own writing. But it wasn’t just better next-word prediction in general; it captured my linguistic nuances, and even artifacts of my self and memories.

Reinforced by those millions of webpages it had already read, the model was now able to copy me precisely, asymptotically approaching my own stream of consciousness.

I kept reading. Despite the model reciting real life events, and recalling particular emotions that I felt last week, I felt a growing awareness that it was another entity from which all of these words were streaming. So it didn’t feel like reading my own writing, but rather like a deep form of introspection through a cloning of the self. For the first time, instead of writing, I was able to listen. Observing something else struggle to express itself evoked a sense of pity.

The words, despite being randomly generated, held much weight. I understood the Author’s struggle; the pursuit to capture every fleeting thought and feeling through this futile emission of words - a unique kind of words that did not exist to be read. And so I stopped writing.

conclusion

Memory hoarding OCD provides the rare opportunity to replicate your consciousness, as you have unintentionally created the perfect dataset for doing so.

Could it be that in some way the weights and biases in this 355M parameter model mirror my own brain? Probably not. But in some extrinsic way, this little thing is myself.

So, that’s the story of how I accidentally cured my OCD, and cloned my consciousness, or stream of thought, or internal monologue… whatever you call it.